Akshay Agrawal

Building marimo.io

PhD in Electrical Engineering, Stanford University

MS, BS in Computer Science, Stanford University

akshayka@cs.stanford.edu

GitHub / Google Scholar / Twitter / LinkedIn / Blog

I'm currently building marimo, a new kind of reactive notebook for Python that's reproducible, git-friendly (stored as Python files), executable as a script, and deployable as an app.

I'm both a researcher, focusing on machine learning and optimization, and an engineer, having contributed to several open source projects (including TensorFlow, when I worked at Google). I hold a PhD from Stanford University, where I was advised by Stephen Boyd, as well as a BS and MS in computer science from Stanford.

Software

I build open source software designed to make machine learning and math actionable and accessible. Below are some of my projects.

marimo is a next-generation reactive Python notebook that's reproducible, git-friendly (stored as Python files), executable as a script, and deployable as an app.

Papers

*denotes alphabetical ordering of authors

2022

- Allocation of fungible resources via a fast, scalable price discovery method. [bibtex] [code]

- A. Agrawal, S. Boyd, D. Narayanan, F. Kazhamiaka, M. Zaharia. Mathematical Programming Computation.

- Embedded code generation with CVXPY. [bibtex] [code]

- M. Schaller, G. Banjac, S. Diamond, A. Agrawal, B. Stellato, S. Boyd. Pre-print.

2021

- Computing tighter bounds on the n-Queens constant via Newton's method. [bibtex] [code]

- P. Nobel, A. Agrawal, and S. Boyd. Pre-print.

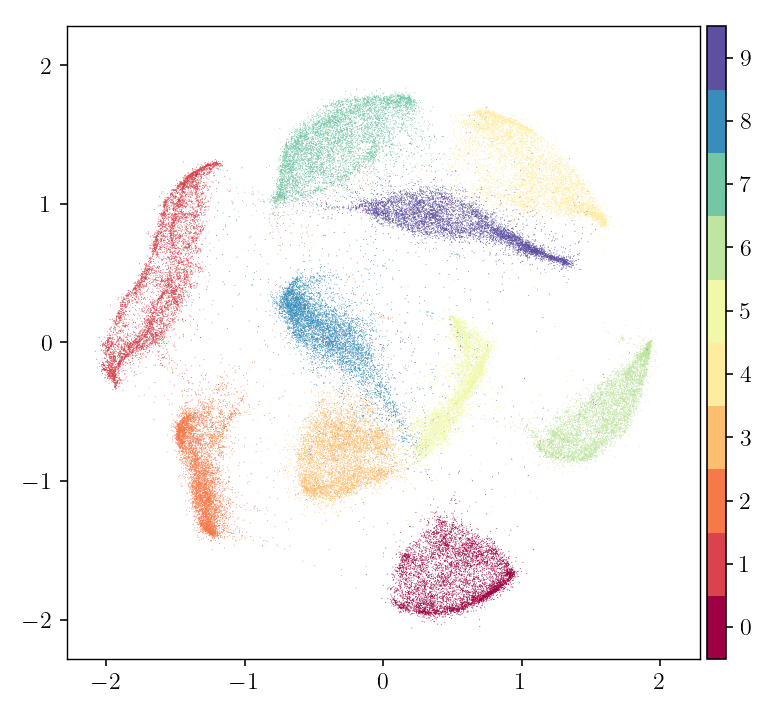

- Minimum-distortion embedding. [bibtex] [slides] [code]

- A. Agrawal, A. Ali, and S. Boyd. Foundations and Trends in Machine Learning.

- Constant function market makers: Multi-asset trades via convex optimization. [bibtex]

- G. Angeris, A. Agrawal, A. Evans, T. Chitra, and S. Boyd. Pre-print.

2020

- Learning convex optimization models. [bibtex] [code]

- A. Agrawal, S. Barratt, and S. Boyd.* IEEE/CAA Journal of Automatica Sinica.

- Differentiating through log-log convex programs. [bibtex] [poster] [code]

- A. Agrawal and S. Boyd. Pre-print.

- Learning convex optimization control policies. [bibtex] [code]

- A. Agrawal, S. Barratt, S. Boyd, B. Stellato.* Learning for Dynamics and Control (L4DC), oral presentation.

- Disciplined quasiconvex programming. [bibtex] [code]

- A. Agrawal and S. Boyd. Optimization Letters.

2019

- Differentiable convex optimization layers. [bibtex] [code] [blog post]

- A. Agrawal, B. Amos, S. Barratt, S. Boyd, S. Diamond, and J. Z. Kolter.* In Advances in Neural Information Processing Systems (NeurIPS).

- Presented at the TensorFlow Developer Summit 2020, Sunnyvale [slides] [video]

- Differentiating through a cone program. [bibtex] [code]

- A. Agrawal, S. Barratt, S. Boyd, E. Busseti, W. Moursi.* Journal of Applied and Numerical Optimization.

- TensorFlow Eager: A multi-stage, Python-embedded DSL for machine learning. [bibtex] [slides] [blog post] [code]

- A. Agrawal, A. N. Modi, A. Passos, A. Lavoie, A. Agarwal, A. Shankar, I. Ganichev, J. Levenberg, M. Hong, R. Monga, S. Cai.* Systems for Machine Learning (SysML).

- Disciplined geometric programming. [bibtex] [tutorial] [poster] [code]

- A. Agrawal, S. Diamond, S. Boyd. Optimization Letters.

- Presented at ICCOPT 2019, Berlin [slides]

2018

- A rewriting system for convex optimization problems. [bibtex] [slides] [code]

- A. Agrawal, R. Verschueren, S. Diamond, S. Boyd. Journal of Control and Decision.

2015

- YouEDU: Addressing confusion in MOOC discussion forums by recommending instructional video clips. [bibtex] [dataset] [code]

- A. Agrawal, J. Venkatraman, S. Leonard, and A. Paepcke. Educational Data Mining.

- Presented at EDM 2015, Madrid [slides]

Industry

I enjoy speaking with people working on real problems. If you'd like to chat, don't hesitate to reach out over email.

I have industry experience in designing and building software for machine learning (TensorFlow 2.0), optimizing the scheduling of containers in shared datacenters, motion planning and control for autonomous vehicles, and performance analysis of Google-scale software systems.

From 2017 to 2018, I worked on TensorFlow as an engineer on the Google Brain team. Specifically, I developed a multi-stage programming model that lets users enjoy eager (imperative) execution while giving them the option to optimize blocks of TensorFlow operations via just-in-time compilation.

I honed my technical infrastructure skills over the course of four summer internships at Google, where I:

- conducted fleet-wide performance analyses of programs in shared servers and datacenters;

- analyzed Dapper traces for the distributed storage stack and uncovered major performance bugs;

- built a simulator for solid-state drives and investigated garbage reduction policies; and

- wrote test suites and tools for the Linux production kernel.

Teaching

I spent seven quarters as a teaching assistant for the following Stanford courses:

EE 364a: Convex Optimization I. Professor Stephen Boyd. Spring 2016-17, Summer 2018-19.

CS 221: Artificial Intelligence, Principles and Techniques. Professor Percy Liang. Autumn 2016-17.

CS 109: Probability for Computer Scientists. Professor Mehran Sahami and Lecturer Chris Piech. Winter 2015-16, Spring 2015-16, Winter 2016-17.

CS 106A: Programming Methodology. Section Leader. Lecturer Keith Schwarz. Winter 2013-14.

Essays

Paths to the Future: A Year at Google Brain. January 2020.

A Primer on TensorFlow 2.0. April 2019.

Learning about Learning: Machine Learning and MOOCs. June 2015.

Machines that Learn: Making Distributed Storage Smarter. September 2014.

Technical Reports

Separation Theorems. Lecture notes on separation theorems in convex analysis. A. Agrawal. 2019.

A Cutting-Plane, Alternating Projections Algorithm for Conic Optimization Problems. A. Agrawal. 2017. [code]

Cosine Siamese Models for Stance Detection. A. Agrawal, D. Chin, K. Chen. 2017. [code]

Xavier: A Reinforcement-Learning Approach to TCP Congestion Control. A. Agrawal. 2016. [code]

B-CRAM: A Byzantine-Fault-Tolerant Challenge-Response Authentication Mechanism. A. Agrawal, R. Gasparyan, J. Shin. 2015. [code]